Bot Attack

Inside the Bot Threat Landscape

For years, bots felt like harmless background activity. Small crawlers, SEO tools, uptime pings. Nothing alarming. But the internet looks different now. Automation has quietly become one of the most significant forces shaping digital traffic.

Here’s the shift: Nearly half of all internet traffic today comes from non-human sources.

And a surprising amount of that traffic is not friendly automation.

Bots don’t behave like humans. They don’t sleep, follow time zones, or wait for weekday traffic. They move at a steady pace, blend into real sessions through residential IPs, and often skip the UI to hit APIs directly.

A few changes made this explosion possible:

- AI made writing and adapting bots incredibly easy.

- Low-code bots turned scraping and credential testing into simple workflows.

- Botnets, proxies, and CAPTCHA solvers became cheap “as-a-service” tools.

This created a full spectrum of automation.

Helpful bots that keep your site running. Neutral bots that gather comparisons or prices. And malicious ones that test stolen credentials, scrape sensitive data, or scalp inventory the moment it becomes available.

Inside our Threat Lab, we often see something newer: bots stitched together like toolchains. Puppeteer drives navigation. Proxy networks handle identity. An LLM rewrites content or imitates a support message. These sessions look real enough that older WAF models can’t classify them.

The more you look, the clearer it becomes. Bots aren’t a secondary concern anymore. They’re part of your traffic.

And to understand how to defend against them, we first need to redefine what a bot attack actually is.

What Are Bot Attacks? (And Why Definitions Fall Short Today)

The textbook definition seems simple enough: a bot is software that performs actions online automatically, and a bot attack occurs when that automation is misused.

But this definition no longer helps you recognize an attack.

In real logs, an uptime checker and a credential stuffing bot can behave almost identically. Both repeat requests. Both hit endpoints consistently. Both look like “automation”. Intention isn’t visible in a single request, and traditional monitoring can’t distinguish between them.

The bot landscape has evolved dramatically. Bad bots now run full browsers, store cookies, mimic device fingerprints, and imitate human pauses or scroll patterns. They’ve become sophisticated enough to fool most conventional defenses.

Two widespread misconceptions make detection even harder:

- Bots don’t only target large enterprises. Small sites face daily automated attacks.

- CAPTCHA no longer works as a barrier. AI solvers and human solve-farms bypass most standard challenges in seconds.

To understand how quickly threats materialize, consider a ten-second window: A login API goes live. A distributed bot instantly begins sending stolen credential pairs. A few succeed. The bot detects those successes from tiny response differences, then follow-up API calls pull personal data or stored information. All within seconds.

So, here’s the updated definition for 2026: A bot attack is adaptive automation designed to extract value, bypass controls, or cause harm while mimicking legitimate user behavior.

This shift from simple scripts to intelligent mimicry changes everything about how organizations must defend themselves. To build effective defenses, you first need to understand what these attacks are actually trying to accomplish.

Types of Bot Attacks (and What They Actually Do to Businesses)

Every organization sees “bot traffic,” but not all bot traffic behaves the same. Understanding bot attacks means looking at what each type aims to achieve: what it extracts, breaks, fakes, or manipulates.

Scraper Bots: The Silent Theft of Your Competitive Edge

These focus on copying what you’ve built. Product listings, prices, content, reviews, catalogues – anything that can be lifted, republished, or used to undercut you. Even a small scraper running through residential proxies can fire off hundreds of thousands of requests per hour, often aimed directly at your pricing or content APIs rather than the UI.

The impact shows up slowly: duplicated content in search results, margin pressure from competitors, or entire databases showing up in unauthorized AI training sets.

Credential Stuffing Bots: Turning Stolen Data into Account Access

While scrapers target your data, credential stuffing bots target your users. They run massive lists of leaked username-password combinations against your login API. When they succeed, the outcome is predictable: account takeovers, fraud, and resale of valid accounts. These campaigns overload login endpoints and inflate cloud bills long before they trigger an obvious alert.

The connection between these attack types matters. Scrapers often work in tandem with credential stuffing operations, first gathering user information and then using it to break into accounts.

DDoS Bots: Overwhelming Infrastructure to Create Chaos

Some attacks don’t aim for subtle extraction. DDoS bots overwhelm servers, CDNs, and API gateways with sheer volume. The cost appears in outages, bandwidth spikes, and emergency scaling. Many DDoS events serve as distractions, drawing defenders away while another automated attack moves quietly elsewhere.

Spam and Phishing Bots: Contaminating Your Data Streams

These bots don’t steal or break anything directly. Instead, they contaminate everything. They fill forms with junk, poison lead funnels, generate fake engagement, or push phishing messages created by AI text and voice models. A related variant, registration bots, floods your signup flow with counterfeit accounts that distort user analytics and drain promotional budgets.

The damage here compounds over time. Each fake registration or spam submission degrades the quality of your data, leading to poor business decisions based on polluted metrics.

Subscription Bots: Exploiting SaaS Business Models

Newer attacks specifically target modern SaaS economics. Subscription bots automate free trials or create fake signups using stolen cards. The damage hits customer acquisition metrics, promotional budgets, and chargeback rates. Many of these are sold as packaged “subscription bot attack services”, complete with tutorials and support.

AI-Powered Bots: The Conversational Threat

The newest category leverages large language models. These bots imitate human conversation, generate dynamic phishing flows, or carry out multi-step fraud that feels conversational. They trick behavioral systems because they “sound” human and adapt mid-interaction, making them far more challenging to detect than their predecessors.

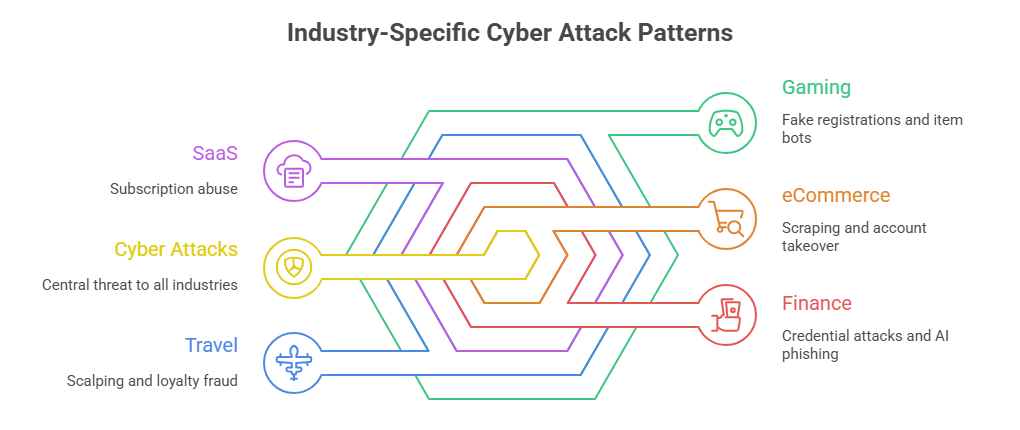

Industry Patterns: Where Each Attack Concentrates

When you map these attacks to industries, clear patterns emerge:

- eCommerce faces scraping and account takeover.

- Finance sees credential attacks and AI-driven phishing.

- SaaS platforms deal with subscription abuse.

- Travel sites encounter scalping and loyalty fraud.

- Gaming networks face fake registrations and item bots.

Once you recognize these categories, the traffic no longer looks random. You start seeing intent. And understanding intent reveals something even more important: these attacks don’t operate in isolation. They’re part of a larger, coordinated ecosystem explicitly built to evade detection.

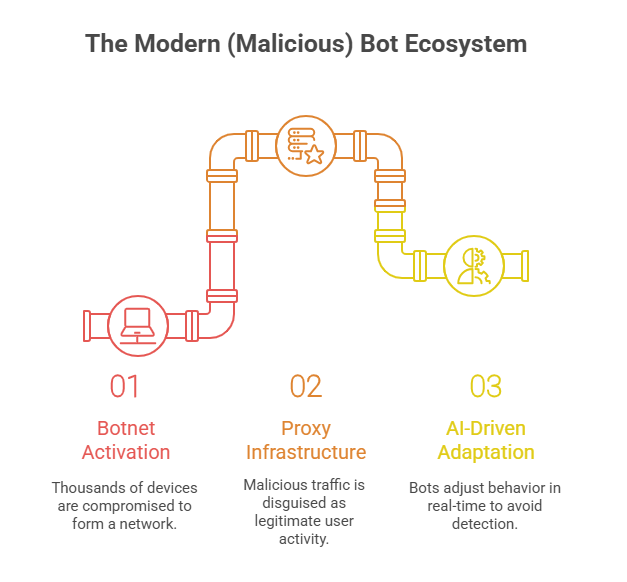

The Modern Bot Ecosystem: From Simple Scripts to Coordinated Systems

The last section explored what bot attacks accomplish. This section reveals how they work.

Contemporary bot attacks don’t originate from a single script running in someone’s basement. They emerge from coordinated systems built across devices, clouds, proxies, and AI models, all working together in a seamless flow.

The Foundation: Botnets

A single botnet can compromise thousands of everyday devices, such as smart TVs, cameras, and routers. These compromised devices form a distributed attack network. Many belong to regular households that never realize they’re contributing to automated traffic hitting your application at 3 AM.

The Disguise: Infrastructure Layers

Sophisticated proxy infrastructure makes malicious traffic indistinguishable from legitimate users. Proxy pools rotate IPs constantly. Residential IPs make traffic appear local and human. Cloud orchestration distributes requests across regions to avoid concentration. This layer exists purely to blend automated activity into your legitimate traffic streams.

The Intelligence: AI-Driven Adaptation

The newest evolution changes everything. Bots no longer execute predetermined actions; they adjust in real time:

- Throttling their requests when your system applies rate limits

- Changing scraping behavior when blocked

- Modifying cursor movements or typing patterns

- Idling, scrolling, or pausing to mimic authentic sessions

The most advanced systems use large language models to generate full conversation flows, adapt to prompts, and shape responses that feel natural. This makes AI-driven phishing and fraud far more convincing than anything from earlier eras.

Evolution Timeline: From Brute Force to Intelligence

The progression over the past decade tells a clear story:

- Early scripts: Basic HTTP flooding with minimal sophistication

- Middle phase: Proxy chaining and headless browsers

- Modern phase: CAPTCHA bypass, behavioral mimicry, residential IP masking

- Current wave: LLM-powered automation that imitates human intent

Automation didn’t just grow stronger. It developed the ability to learn and adapt.

The New Frontier: Attacks on AI Applications

LLM-backed products now face their own category of bot attacks. Automated prompt floods, high-volume synthetic inputs, and coordinated interaction flows aim to exhaust or manipulate AI models themselves.

Preventing these attacks requires specialized guardrails: behavioral rate limits, input validation tuned for LLM workloads, and anomaly scoring that looks beyond traditional API metrics.

These coordinated systems may seem chaotic, but they follow a predictable execution pattern that most defenders never recognize until it’s too late.

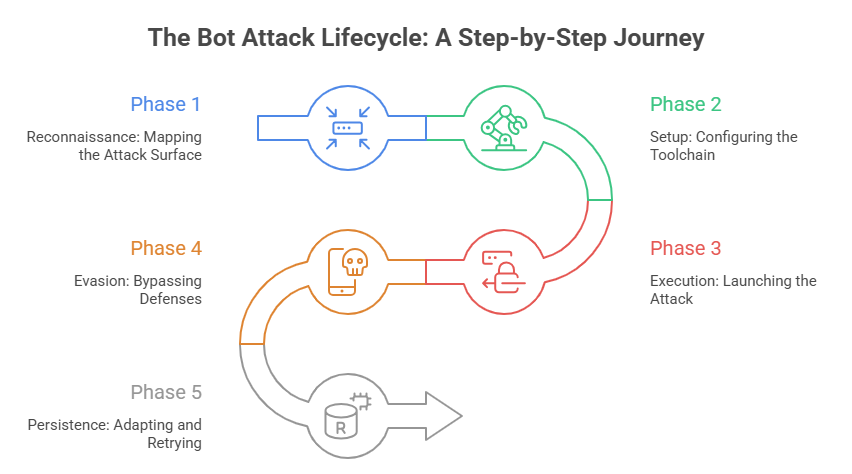

How Bot Attacks Work: Inside the Automation Loop

Bot attacks appear chaotic from the outside, but internally they follow a predictable pattern. Every major campaign, whether it’s scraping, credential stuffing, or subscription abuse, follows the same loop. Understanding this loop makes the entire ecosystem easier to read.

Phase 1: Reconnaissance

The attack begins before the first malicious request arrives. Bots map your attack surface by probing for login pages, open APIs, pricing endpoints, or anything exposing structured responses. They test your rate limits, check whether you use CAPTCHA, and identify what your WAF reacts to. This early fingerprinting reveals how much force attackers can apply without triggering alerts.

Phase 2: Setup

Once they understand your defenses, attackers configure their toolchain. This is where Selenium, Puppeteer, and Playwright come into play: full-browser automation that behaves like genuine user sessions. They select proxy pools (typically residential or mobile), load cookies, configure sessions, and integrate CAPTCHA solvers. Human solve-farms often operate in the background, clearing challenges in seconds whenever automation pauses.

Phase 3: Execution

This is the moment defenders typically notice something is wrong. Bots begin sending automated requests at scale: scraping pages, hammering login APIs with leaked credentials, or repeatedly hitting pricing endpoints. Everything runs fast, parallel, and coordinated.

A typical credential-stuffing attack unfolds like this: an eCommerce login page suddenly receives thousands of near-identical login attempts per minute, all from rotating IP addresses. A few credentials are valid. Those accounts are taken over immediately and used for fraud.

Phase 4: Evasion

Simple defenses collapse at this stage. Bots rotate IPs faster than logs can update. They randomize user-agents. They spoof device fingerprints. They inject behavioral noise (random mouse trails, scrolls, pauses) to mimic real sessions.

Our Threat Lab has observed this pattern consistently: rate limiting becomes ineffective when attackers swap thousands of IPs per minute. Speed doesn’t protect these operations; distribution does.
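To make the point about distribution concrete, here is a minimal sketch that counts the same failed logins two ways: per IP and per endpoint. The event format, the thresholds, and the function name are assumptions chosen for illustration, not a description of any particular product.

```python
from collections import Counter

# Hypothetical thresholds for illustration only; real values depend on your traffic.
PER_IP_LIMIT = 20          # failed logins allowed per IP per window
PER_ENDPOINT_LIMIT = 500   # failed logins allowed per endpoint per window


def find_violations(failed_logins):
    """failed_logins: iterable of (ip, endpoint) pairs seen in one time window.

    Returns (ips_over_limit, endpoints_over_limit).
    """
    failed_logins = list(failed_logins)
    per_ip = Counter(ip for ip, _ in failed_logins)
    per_endpoint = Counter(endpoint for _, endpoint in failed_logins)
    ips_over = {ip for ip, n in per_ip.items() if n > PER_IP_LIMIT}
    endpoints_over = {ep for ep, n in per_endpoint.items() if n > PER_ENDPOINT_LIMIT}
    return ips_over, endpoints_over


# Simulated distributed attack: 1,000 IPs, 2 failed attempts each.
events = [
    (f"10.0.{i // 256}.{i % 256}", "/api/login")
    for i in range(1000)
    for _ in range(2)
]
ips_over, endpoints_over = find_violations(events)
print(ips_over)        # no single IP exceeds its limit
print(endpoints_over)  # the login endpoint does
```

In this simulated window, every IP stays far below its own limit, yet the endpoint as a whole sees 2,000 failures. Counting by target rather than by source is one way to keep a signal when the sources rotate.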

Phase 5: Persistence

When blocked, sophisticated bots don’t stop. They adapt. Some shift into “low and slow” mode, operating below detection thresholds. Some retry across different geographic regions. Some wait hours, then reappear using entirely different proxy networks. Botnets even morph techniques between campaigns, making each wave appear slightly different.

Reading the Pattern

Understanding this loop transforms how you interpret bot traffic. It’s not random noise. It’s a deliberate system designed to test, learn, evade, and repeat.

Catching these attacks early requires recognizing signals that human analysts rarely notice without the right tools and training.

How to Detect Bot Attacks: Early Signals and Tools

By the time a bot attack becomes obvious, damage has typically already occurred. Effective bot attack detection means spotting subtle footprints long before the attack peaks.

The key insight: bots reveal themselves first in behavior, not volume.

Behavioral Red Flags

These patterns rarely originate from human users:

- Identical or near-identical mouse paths across sessions

- Unusually low time spent on pages that should require more engagement

- Click rates far above what real users can physically perform

- Perfectly repeatable scroll sequences

Automated systems operate with consistency. Humans introduce natural variability.
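One simple way to quantify that consistency is the coefficient of variation of the gaps between a session's events. The sketch below assumes you already collect per-session event timestamps; the sample data is made up. It would only catch naive automation, since bots that inject random pauses will score higher.

```python
from statistics import mean, pstdev


def timing_variability(timestamps):
    """Coefficient of variation of gaps between consecutive events (seconds).

    Values near 0 mean metronome-like timing. Returns None when there are
    fewer than three events, since two gaps are the minimum to compare.
    """
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if len(gaps) < 2:
        return None
    avg = mean(gaps)
    if avg == 0:
        return 0.0
    return pstdev(gaps) / avg


# Hypothetical click timestamps, in seconds since session start.
scripted = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
human = [0.0, 1.8, 2.4, 6.1, 6.9, 11.3]

print(timing_variability(scripted))
print(timing_variability(human))
```

A score like this works best as one input among several rather than as a verdict on its own.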

Technical Indicators

Network and device data reveal hidden signals:

- Strange or outdated user-agent strings

- Abnormal headers that don’t match common browsers

- Rapid IP switching across geographically distant locations

- Device fingerprints with impossible combinations (wrong time zone, incompatible OS pairings)

Our Threat Lab has observed that user-agent anomalies often reveal more than IP-based signals. Bots rotate IPs constantly, but many still reuse predictable user-agent templates that create detectable patterns.
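The “impossible combinations” in the list above can be checked with a few consistency rules. The following is a rough sketch: the field names, the platform mapping, and the three-hour time zone tolerance are all assumptions for illustration, and the mapping is far from exhaustive.

```python
# Hypothetical mapping from a user-agent OS token to the platform values
# a real browser on that OS would plausibly report. Illustrative, not exhaustive.
EXPECTED_PLATFORMS = {
    "Windows NT": {"Win32"},
    "Macintosh": {"MacIntel"},
    "Android": {"Linux armv8l", "Linux armv7l", "Linux aarch64"},
    "iPhone": {"iPhone"},
}


def fingerprint_conflicts(fp):
    """fp: dict with hypothetical keys 'user_agent', 'platform',
    'tz_offset_minutes' (reported by the client) and
    'ip_tz_offset_minutes' (derived from IP geolocation).

    Returns a list of human-readable inconsistencies.
    """
    conflicts = []
    for os_token, platforms in EXPECTED_PLATFORMS.items():
        if os_token in fp["user_agent"]:
            if fp["platform"] not in platforms:
                conflicts.append(
                    f"UA claims {os_token} but platform is {fp['platform']}"
                )
            break
    # Allow some slack: VPNs and travel produce honest mismatches.
    if abs(fp["tz_offset_minutes"] - fp["ip_tz_offset_minutes"]) > 180:
        conflicts.append("client time zone far from IP geolocation time zone")
    return conflicts


suspicious = {
    "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                  "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "platform": "Linux x86_64",
    "tz_offset_minutes": 0,
    "ip_tz_offset_minutes": -300,
}
consistent = {
    "user_agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
                  "AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "platform": "MacIntel",
    "tz_offset_minutes": -300,
    "ip_tz_offset_minutes": -300,
}
print(fingerprint_conflicts(suspicious))
print(fingerprint_conflicts(consistent))
```

Each conflict is weak evidence on its own, so a reasonable design feeds the count into a broader score instead of blocking on a single mismatch.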

Detection Methods That Work in Practice

Modern bot attack detection relies on layered telemetry rather than any single technique.

Traffic analytics dashboards identify rising bot-to-human ratios, login failure spikes, and anomaly clusters across your infrastructure.

Behavioral biometrics track typing cadence, cursor movement, and interaction flow. These are signals that bots imitate poorly, if at all.

Honeypot fields and JavaScript challenges exploit fundamental differences between bots and browsers. Bots fill invisible fields that humans never see. Bots mishandle JavaScript challenges that real browsers execute flawlessly.
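A honeypot check can be very small. This sketch assumes a form that includes one extra field hidden from humans with CSS; the field name is hypothetical, and the function only inspects already-parsed form data.

```python
# Hypothetical name of a form field hidden from humans with CSS.
HONEYPOT_FIELD = "company_website"


def is_probable_bot(form_data):
    """Return True when the hidden honeypot field arrived with a value.

    form_data: dict of submitted field names to string values.
    """
    return bool(form_data.get(HONEYPOT_FIELD, "").strip())


human_submission = {"email": "a@example.com", "message": "Hi", HONEYPOT_FIELD: ""}
bot_submission = {
    "email": "b@example.com",
    "message": "Buy now",
    HONEYPOT_FIELD: "http://spam.example",
}

print(is_probable_bot(human_submission))
print(is_probable_bot(bot_submission))
```

Bots that render the page in a full browser may skip hidden fields, so this catches simpler form-fillers and should sit alongside the other signals in this section.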

How We Stopped a Coordinated Carding Attack

A major eCommerce platform noticed subtle anomalies during checkout: multiple failed payment attempts with rotating card numbers, coming from what appeared to be legitimate user sessions. Traditional security tools saw valid tokens and proper authentication, missing the pattern entirely.

AppSentinels’ business logic understanding flagged the behavior immediately. The API sequence was legitimate, but the velocity and variation weren’t. Device fingerprints rotated too frequently, payment methods changed with every attempt, and the checkout workflow was being executed far faster than human behavior allows.

Within hours, AppSentinels detected a coordinated carding attack. Bots were testing stolen credit card numbers through the platform’s payment flow. Automated enforcement blocked suspicious sequences before fraud could occur, protecting both the business and cardholders, while security teams received actionable alerts with full context into the attack pattern.
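The “velocity and variation” idea in this case can be illustrated with a small sliding-window check. This is not AppSentinels’ implementation; it is a simplified sketch with made-up thresholds and a hypothetical event format, showing how many distinct cards a single session tries in a short span.

```python
from collections import defaultdict

WINDOW_SECONDS = 600     # illustrative look-back window
MAX_DISTINCT_CARDS = 3   # illustrative per-session limit inside the window


def sessions_testing_cards(attempts):
    """attempts: iterable of (timestamp_seconds, session_id, card_fingerprint),
    sorted by timestamp. card_fingerprint stands in for a tokenized card
    identifier, never a raw card number.

    Returns the set of session ids that used more than MAX_DISTINCT_CARDS
    different cards within any WINDOW_SECONDS span.
    """
    recent = defaultdict(list)   # session_id -> [(timestamp, card), ...]
    flagged = set()
    for ts, session_id, card in attempts:
        history = recent[session_id]
        history.append((ts, card))
        # Drop attempts that fell out of the window.
        history[:] = [(t, c) for t, c in history if ts - t <= WINDOW_SECONDS]
        if len({c for _, c in history}) > MAX_DISTINCT_CARDS:
            flagged.add(session_id)
    return flagged


# Simulated data: one session cycling cards every 5 seconds, one retrying a single card.
attempts = [(i * 5, "sess-bot", f"card-{i}") for i in range(6)]
attempts += [(0, "sess-human", "card-a"), (400, "sess-human", "card-a")]
attempts.sort()
flagged_sessions = sessions_testing_cards(attempts)
print(flagged_sessions)
```

A customer retrying the same card is left alone, while a session cycling through cards is flagged. In practice the key might be a device or account rather than a session, depending on what the attacker rotates.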

The Five Metrics Security Teams Should Monitor Weekly

These five indicators catch more early-stage attacks than almost any other combination:

- Bot-to-human traffic ratio

- Anomaly density

- Login failure spikes

- Proxy usage percentage

- User-agent anomaly rate

If even two of these metrics shift sharply within a short period, treat it as evidence that a bot campaign is building momentum.
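The two-of-five rule can be expressed as a small weekly comparison. In this sketch the metric keys mirror the list above, while the 50% change threshold and the sample values are assumptions picked for illustration.

```python
# Metric names follow the list above; the 50% threshold is an assumption.
SHIFT_THRESHOLD = 0.5


def shifted_metrics(last_week, this_week, threshold=SHIFT_THRESHOLD):
    """Return names of metrics whose relative change exceeds the threshold."""
    shifted = []
    for name, previous in last_week.items():
        current = this_week[name]
        if previous == 0:
            changed = current > 0
        else:
            changed = abs(current - previous) / previous > threshold
        if changed:
            shifted.append(name)
    return shifted


last_week = {
    "bot_to_human_ratio": 0.30,
    "anomaly_density": 0.02,
    "login_failure_rate": 0.05,
    "proxy_usage_pct": 0.10,
    "user_agent_anomaly_rate": 0.01,
}
this_week = {
    "bot_to_human_ratio": 0.33,
    "anomaly_density": 0.02,
    "login_failure_rate": 0.12,
    "proxy_usage_pct": 0.21,
    "user_agent_anomaly_rate": 0.01,
}
alerts = shifted_metrics(last_week, this_week)
campaign_suspected = len(alerts) >= 2
print(alerts, campaign_suspected)
```

Here login failures and proxy usage both more than double, so the rule fires even though the overall bot-to-human ratio barely moves.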

The Core Principle

Effective bot attack detection isn’t about blocking traffic at the perimeter. It’s about understanding how automation behaves, so you recognize it long before it escalates into a full-scale problem.

Understanding detection is crucial, but the real question for business leaders is: what does it cost when these attacks succeed?

The Impact: Why Bot Attacks Hurt More Than Your Server Logs Show

Bot attacks don’t dramatically announce themselves. They look like traffic. Like interest. Like activity. But the damage spreads across the business in ways most teams never connect back to automated threats.

Financial Impact

Automated attacks target revenue streams before they stress infrastructure:

- Ad fraud drains budgets by generating fake impressions and clicks

- Scalper bots purchase limited inventory before legitimate customers can access it

- High automated traffic inflates bandwidth and CDN costs

- Chargebacks spike when subscription bots use stolen cards

Many teams only recognize the pattern after budgets begin behaving unpredictably.

Operational Impact

Automated traffic distorts the data organizations rely on for decision-making:

- Analytics inflate because synthetic sessions appear as real users

- Conversion funnels fail because teams optimize for non-human behavior

- Login pages slow down during credential-stuffing bursts

- Promotional campaigns break when registration bots flood signup flows

This leads to a common realization: “We were building for bots without knowing it.”

Reputational Impact

Users experience the consequences before logs reveal the cause:

- Legitimate customers get locked out after credential attacks

- Support tickets increase from failed transactions

- Content scraping leads to duplicate pages and SEO penalties

- Trust erodes when service quality suddenly degrades

Bot traffic doesn’t just affect infrastructure. It damages perception, loyalty, and long-term growth potential.

The Hidden Cost

The most insidious impact isn’t immediate. Misinterpreting bot traffic as genuine user behavior leads to months of misallocated marketing spend and flawed product decisions. Organizations optimize for patterns that don’t represent real customer needs, building features for phantom users while actual customers remain underserved.

This strategic misdirection represents the true cost of undetected bot activity. It’s not just about the immediate damage; it’s about the compounding effect of building your business strategy on contaminated data.

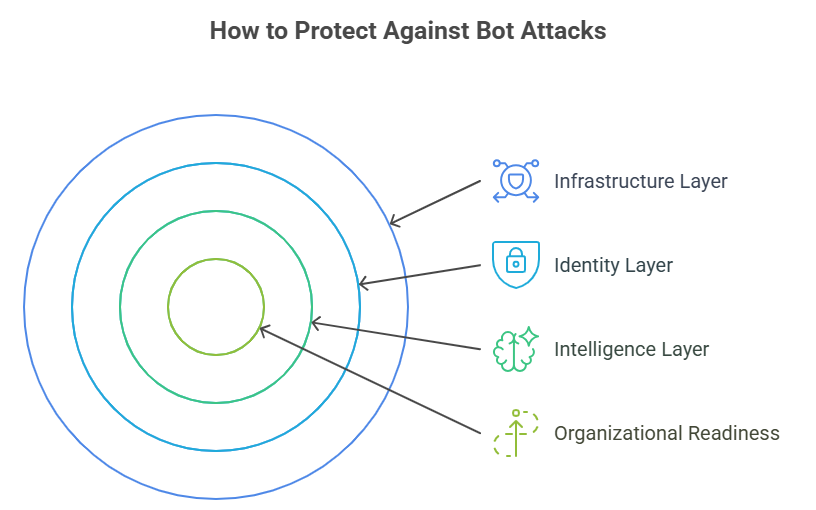

Defense Strategies & Best Practices (Bot Attack Protection That Actually Works)

Effective bot defense isn’t about blocking everything. It’s about layering protections to mitigate bot attacks without harming legitimate users.

1. Infrastructure Layer: The Foundation

Basic resilience starts at the edge:

- WAF rules filter obvious automated patterns

- Rate limiting slows high-volume floods

- Geofencing removes traffic from high-risk regions

- API throttles protect sensitive endpoints

These controls provide essential baseline protection, but bots rotate IPs too quickly for infrastructure-layer defenses to work in isolation.
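For readers who have not implemented rate limiting before, a token bucket is one common approach. The class below is a minimal single-process sketch with illustrative parameters; a production limiter would usually keep its state in a shared store and hold one bucket per client key.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity` requests and refills at `rate` per second."""

    def __init__(self, rate, capacity, now=None):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.updated = time.monotonic() if now is None else now

    def allow(self, now=None):
        """Consume one token if available. `now` can be injected for testing."""
        now = time.monotonic() if now is None else now
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# One bucket per client key (IP, API key, or account are all possible choices).
bucket = TokenBucket(rate=1, capacity=5, now=0.0)
burst = [bucket.allow(now=0.0) for _ in range(7)]   # 5 allowed, then 2 rejected
later = bucket.allow(now=2.0)                        # refilled after 2 seconds
print(burst, later)
```

The choice of key matters as much as the algorithm. A bucket keyed only by IP inherits the weakness described above, because a rotating attacker simply gets a fresh bucket with each new address.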

2. Identity Layer: Protecting Accounts

Most bot attacks target authentication flows, making identity security critical:

- Multi-factor authentication turns stolen credentials into dead ends

- Credential hygiene policies reduce password reuse across services

- Invisible verification challenges suspicious sessions without disrupting legitimate users

Strong identity checks stop the majority of account takeover attempts before they succeed.

3. Intelligence Layer: Where Modern Defense Lives

This adaptive layer distinguishes sophisticated platforms from basic defenses:

- AI-based anomaly scoring analyzes behavior patterns, not just traffic volume

- Continuous learning adjusts detection thresholds based on evolving attack patterns

- Intent analysis identifies why a session is behaving a certain way, not just what it’s doing

Rather than blocking aggressively and creating friction, intelligent systems escalate challenges only where evidence warrants intervention.
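Escalation of this kind can be pictured as a mapping from a risk score to a graduated response. The sketch below assumes a score between 0 and 1 produced elsewhere; the cut-offs and action names are placeholders, not recommended values.

```python
def choose_action(risk_score):
    """Map a risk score in [0, 1] to an enforcement step.

    The cut-offs are placeholders; a real system would tune them per endpoint.
    """
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score < 0.3:
        return "allow"
    if risk_score < 0.6:
        return "throttle"
    if risk_score < 0.85:
        return "challenge"
    return "block"


for score in (0.1, 0.5, 0.7, 0.95):
    print(score, choose_action(score))
```

Keeping intermediate steps such as throttling and challenges means a false positive costs a legitimate user some friction rather than access.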

4. Organizational Readiness: The Overlooked Layer

Bot threats aren’t purely security problems. They affect product, marketing, and data teams equally:

- Regular audits reveal rising bot-to-human ratios before they impact decisions

- Red-team simulations expose defensive blind spots

- Cross-functional reviews help detect anomalies early

Organizations that treat bots as a business risk rather than just an IT issue respond far earlier and more effectively.

The Defense Progression

Our Threat Lab has observed that blocking bots outright often backfires. Layered throttling and behavioral scoring produce better outcomes for both security and user experience.

Think of protection as a progression:

Reactive → Proactive → Adaptive

WAF → Behavioral insights → AI-driven intent scoring

The higher you move up this progression, the more effective your defense becomes and the smoother the experience remains for legitimate users.

With these defensive principles established, the next question becomes: how do these strategies perform against real-world threats?

Real-World Case Studies and Threat Lab Insights

Modern bot attacks follow recognizable patterns. Understanding how defenses perform against actual threats helps organizations benchmark their own readiness.

Case Study: The 2016 Mirai Botnet Attack on Dyn

The Attack

On October 21, 2016, a massive distributed denial-of-service attack targeted Dyn, a major DNS provider that managed domain name resolution for numerous high-profile internet platforms. The attack began at 7 AM Eastern Time when cybercriminals commanded devices within the Mirai botnet to send tens of millions of requests to Dyn’s systems, overwhelming its infrastructure.

The attack prevented internet users from accessing major websites, including Twitter, Netflix, PayPal, Amazon, Reddit, GitHub, Spotify, and numerous others across the Northeastern United States and parts of Europe. The attack reached peak traffic rates of 1.2 terabits per second, making it one of the largest DDoS attacks ever recorded at that time.

The Botnet Behind It

Mirai malware targeted IoT devices like routers, DVRs, and web-enabled security cameras by exploiting devices with default factory usernames and passwords. Approximately 100,000 IoT devices participated in the attack. Device owners typically didn’t notice the hijacking, as the devices continued to function, though perhaps more slowly than usual.

The Impact

While Dyn mitigated the first wave and restored impacted platforms in approximately 2 hours, the incident continued throughout the day, as cybercriminals launched two additional attack waves in the afternoon and evening. Sony alone reported losses of $2.7 million due to the attack.

The broader implications were significant. The 2016 Mirai attack served as a crucial turning point for cybersecurity, emphasizing the dangers of the rapidly expanding IoT ecosystem and its potential weaponization.

Key Lessons

The Mirai attack revealed several critical vulnerabilities that remain relevant today:

- IoT Security Weakness: Millions of consumer devices shipped with default credentials that users never changed, creating an easily exploitable attack surface.

- Cascading Impact: Targeting the DNS infrastructure created widespread collateral damage affecting companies that weren’t the primary targets.

- Code Proliferation: The Mirai source code was published online about three weeks before the attack, allowing anyone with technical skills to build their own version of the malware. This led to dozens of variants, including Satori, Okiru, and Masuta.

- Attribution Difficulty: Multiple groups claimed responsibility, and while federal investigators eventually identified the creators as three college students targeting gaming platforms, the widespread impact appeared to be unintended collateral damage.

Common Scenarios Across Industries

Beyond this historic incident, specific patterns appear consistently across sectors:

eCommerce scraping: Retailers face catalog cloning via distributed residential IPs, leading to SEO degradation and competitive pricing pressure. Adaptive rate limiting combined with behavioral analysis significantly reduces scraping volume while maintaining legitimate customer access.

SaaS subscription abuse: Platforms encounter coordinated exploitation of free trials using stolen payment methods. Device fingerprinting and payment velocity analysis catch these patterns before significant chargeback damage occurs.

Travel and ticketing: Scalper bots target high-demand inventory by mimicking user sessions. Intent analysis – distinguishing genuine purchase behavior from automated patterns – prevents inventory hoarding.

Emerging Trend: AI-Augmented Attack Tooling

Threat Lab analysis reveals a significant shift in attacker methodology. Approximately one in three bot campaigns now incorporates LLM-generated code pulled from public repositories. Attackers use AI to rewrite scripts, randomize behavior patterns, and mimic human interaction flows with increasing sophistication.

This evolution requires defensive systems that can recognize not just known attack patterns, but the behavioral signatures of adaptive automation itself.

Measuring Defense Effectiveness

The most convincing proof of defensive success lies in measurable outcomes:

- Malicious traffic percentage

- Scraper request volume

- Login failure density

- Infrastructure cost savings post-mitigation

Organizations typically see immediate improvements across these metrics once they deploy adaptive, behavior-driven defenses. The Mirai incident demonstrates what happens when basic security hygiene fails at scale, while modern defenses show how behavioral analysis and intelligent automation can prevent similar cascading failures.

Understanding these real-world patterns provides essential context for the legal and compliance considerations that increasingly shape bot defense strategies.

Legal and Compliance Considerations

Bot attacks raise more than security questions. They create regulatory, contractual, and liability exposure that organizations often discover only after an incident occurs.

Where Regulations Stand

Different regions classify automated misuse differently, but the direction is clear: regulators expect companies to protect personal data against bots just as they would against any other threat.

GDPR (EU): If a bot extracts or accesses personal data without consent, it may constitute unauthorized processing, triggering breach notifications and penalties.

CCPA (California): Credential stuffing and scraping that lead to unauthorized access can constitute a privacy violation, with associated statutory fines.

India’s DPDP Act: This presents particular complexity. DPDP strongly focuses on consent, and automated scraping sits in a grey area. The question policymakers are wrestling with is straightforward: Does scraping publicly visible data qualify as “data misuse” if it is later aggregated or used in ways the user never consented to? The global trend says yes, particularly when the data is used for AI training or profiling.

Terms of Service and Enforceability

To reduce ambiguity, companies are increasingly making their ToS explicit about automation. Strong ToS language typically includes:

- Clear prohibition of scraping or automated access without permission

- Restrictions on data reuse, aggregation, or resale

- Consequences for violations, including bans or legal escalation

These clauses help establish legal standing if bot operators are identified.

Cyber Insurance and Bot-Related Claims

Not all bot attacks are covered equally. Most cyber insurance policies cover breach response, downtime losses, and some fraud costs. Still, many exclude automated scraping, some require proof of reasonable bot protection, and some differentiate between malicious automation and high-volume traffic.

The bottom line: if you want coverage, you need to demonstrate you were taking bot threats seriously.

Responsibilities of Data Controllers

Regardless of region, expectations are converging around core requirements: prevent unauthorized automated access, protect personal data from automated harvesting, log suspicious activity, conduct regular audits, and ensure vendors follow the same standards.

Modern bot defense platforms align security controls with these privacy obligations, making compliance and protection reinforcing rather than competing priorities.

How AppSentinels Stops Bot Attacks Others Miss

Traditional tools can’t distinguish legitimate users from sophisticated bots. WAFs see valid API calls. Rate limiters see traffic spikes. Neither understands whether a checkout flow is running at machine speed or whether a login pattern is humanly impossible.

AppSentinels does.

Business Logic Detection

Our AI engine learns your application’s normal behavior: user session lifecycles, API sequences, role patterns, and complete workflows. This context separates legitimate traffic from threats.

When e-commerce carding bots hit, traditional tools saw authenticated API calls. AppSentinels saw payment methods rotating too fast, checkouts executing at machine speed, and device fingerprints cycling in impossible patterns.

Protection That Understands Your Application

- Account Takeover: Detects credential stuffing through login velocity and behavioral consistency analysis

- Carding Prevention: Stops payment testing by recognizing abnormal checkout behavior patterns

- Scraping Defense: Identifies data extraction through navigation patterns. Real users browse inconsistently; scrapers follow predictable paths.

- Automated Attacks: Defends against DDoS, registration bots, and AI-powered abuse by understanding legitimate user journeys

Runtime enforcement blocks threats automatically, challenges anomalous behavior, or alerts teams, adapting as attacks evolve.

Enterprise-Ready

Protects 100+ billion API calls monthly. Deploys in hours with 50+ integrations. Scales without latency across on-premise, cloud, or hybrid environments.

The bots attacking your application understand your business logic. Your defenses should, too.

To learn more:

- How AppSentinels Addresses the UAE API First Guidelines for Robust API Management and Security

- Appsentinels Ensuring Adherence to SEBI CSCRF API Security Standards

Frequently Asked Questions (FAQs)

What’s the difference between good and bad bots?

Good bots index content, monitor uptime, and help systems run smoothly. Bad bots scrape data, steal accounts, commit fraud, or overload infrastructure.

How can I detect bots without harming the user experience?

Use behavioral analytics, invisible checks, device intelligence, and adaptive challenges instead of forcing CAPTCHA on everyone. Modern platforms analyze intent and escalate verification only when anomalies appear.

Why is CAPTCHA no longer enough?

Modern bots use AI-based CAPTCHA solvers, human solve-farms, and headless browsers that mimic real behavior. This makes CAPTCHA-only defense outdated and ineffective against sophisticated attacks.

What’s the average cost of a bot attack?

The financial impact varies widely based on attack type and industry. DDoS attacks can cost $20,000 to $40,000 per hour in downtime. Credential-stuffing campaigns that lead to account takeovers average $4 million per incident, including fraud losses, response costs, and reputational damage. Scraping attacks create a competitive disadvantage that’s difficult to quantify but compounds over time.

Can bot attacks target small businesses?

Absolutely. The misconception that bots only target large enterprises is dangerous. Small sites face daily automated attacks. Attackers often target smaller organizations specifically because they assume security will be weaker. Payment processors, SaaS startups, and eCommerce sites of any size are common targets.

How long does it take to detect a bot attack?

Traditional security tools often take hours or days to identify bot campaigns. By that time, significant damage has already occurred. Modern AI-driven platforms can detect anomalous patterns within minutes by analyzing behavioral signals rather than waiting for volume thresholds to be crossed.

What’s the difference between bot management and bot mitigation?

Bot management is the broader strategy for identifying, classifying, and controlling all automated traffic (good and bad). Bot mitigation specifically focuses on stopping malicious bots while allowing legitimate automation to function. Effective platforms do both: they manage the whole bot ecosystem while mitigating threats in real time.

How does AppSentinels integrate with enterprise security tools?

Through APIs, connectors, and event streams that plug into WAF/CDN layers, SIEM dashboards, incident response pipelines, and IAM systems. The result is unified visibility across all user touchpoints, with enforcement enabled by existing infrastructure.

Building Bot Resilience That Scales

Bot attacks aren’t slowing down. Automation grows faster, smarter, and cheaper each year. The organizations that stay ahead don’t just react to threats; they build defenses that evolve as quickly as the attacks themselves.

The gap between reactive and proactive organizations comes down to three capabilities:

Visibility: You can’t defend what you can’t see. Comprehensive traffic analysis reveals the true ratio of human to automated sessions across your infrastructure.

Intelligence: Volume-based detection fails against distributed attacks. Behavioral analysis and intent scoring catch threats that traditional tools miss entirely.

Adaptation: Static defenses become obsolete within months. AI-driven systems that learn from attack patterns and adjust thresholds automatically maintain effectiveness as threats evolve.

The Path Forward

Start with an honest assessment. Audit your current traffic to understand your bot exposure. Implement behavioral analytics that look beyond IP addresses and rate limits. Train teams across security, engineering, product, and data functions to recognize bot patterns and their business impact.

Bot defense isn’t a security project. It’s a business imperative that protects revenue, preserves trust, and ensures the data driving your decisions actually represents your real customers.

The question isn’t whether you’ll face sophisticated bot attacks. The question is whether you’ll see them coming.